Interaction Situation

During the interaction, the robot detects a situation that it classifies as consistent with its own goals. In humans, the reaction to such situations can be described as happiness, which in social interaction is often made visible through body language. The robot can use similar means to make its internal state visible to the user. This expression does not reflect an actual positive feeling, since the robot, as a machine, is not capable of experiencing emotions. Still, it can make the robot's goals and intentions transparent to the user and thus make it easier for her to anticipate the robot's future actions. In addition, an expression of happiness might be employed as a means of motivating the user or keeping her entertained.

Solution

Let the robot express its happiness.

Happiness can be expressed through three different communication modalities (in combination or separately):

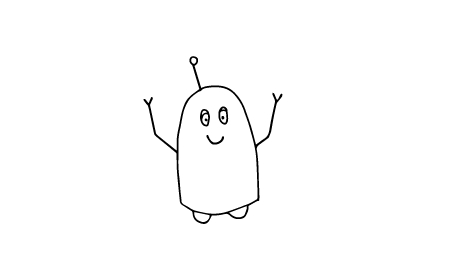

You can let the robot show a dynamic expression, such as lifting its arm in joy. If your robot has a tail, you could let it wag its tail.

You can also let it perform movements on the floor, which should be rather fast-paced to express the energy elicited by happiness. This could mean moving quickly from left to right or having the robot carry out a small dance.
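The fast left-right movement described above can be sketched as a list of timed waypoints, one extreme per beat. This is a minimal sketch, assuming a mobile base that can follow lateral offsets over time; the `Waypoint` type, the `happy_sway` function, and the default amplitude and tempo are hypothetical values for illustration and would need tuning for a specific robot.

```python
from dataclasses import dataclass


@dataclass
class Waypoint:
    t: float  # time in seconds since the start of the motion
    y: float  # lateral offset in metres (positive = left, negative = right)


def happy_sway(amplitude: float = 0.05, tempo_bpm: float = 140.0, beats: int = 8) -> list[Waypoint]:
    """Alternate between left and right offsets, reaching one extreme per beat.

    Hypothetical helper: the defaults are illustrative assumptions,
    not values taken from the pattern or from a study.
    """
    if tempo_bpm <= 0 or beats < 1:
        raise ValueError("tempo_bpm must be positive and beats must be at least 1")
    beat_s = 60.0 / tempo_bpm  # a higher tempo gives shorter beats, i.e. a faster pace
    waypoints = [Waypoint(0.0, 0.0)]  # start centred
    for i in range(beats):
        side = 1.0 if i % 2 == 0 else -1.0  # left on even beats, right on odd beats
        waypoints.append(Waypoint((i + 1) * beat_s, side * amplitude))
    waypoints.append(Waypoint((beats + 1) * beat_s, 0.0))  # return to centre
    return waypoints


if __name__ == "__main__":
    for wp in happy_sway(beats=4):
        print(f"t={wp.t:.2f}s  y={wp.y:+.3f}m")
```

Tempo is the main knob here: raising `tempo_bpm` shortens each beat, which matches the recommendation that the movement be rather fast-paced.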

The impression of happiness can be supported by a corresponding facial expression, including a smile.

Sound and light can also be used to express emotions. However, we recommend first checking which other purposes you plan to use light and sound for. Make sure that these two communication modalities are used consistently for specific purposes throughout your robot's behavior design and are not overused as an "all-purpose weapon".
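One way to keep light and sound cues consistent is to record during design which purpose each cue serves and to flag conflicts early. The following is a minimal sketch of such a bookkeeping aid, assuming cues are identified by simple names. `ModalityPlan`, the cue names, and the default limit of three purposes per modality are hypothetical choices for illustration, not part of the pattern.

```python
class ModalityPlan:
    """Hypothetical design-time registry: each light/sound cue serves exactly one purpose."""

    def __init__(self, max_purposes: int = 3) -> None:
        # Assumed limit on how many distinct purposes one modality may serve,
        # so that light or sound does not become an "all-purpose weapon".
        self.max_purposes = max_purposes
        # Maps (modality, cue) -> purpose, e.g. ("light", "green_pulse") -> "happiness".
        self._purposes: dict[tuple[str, str], str] = {}

    def purposes_for(self, modality: str) -> set[str]:
        """Return all purposes currently assigned to cues of this modality."""
        return {p for (m, _), p in self._purposes.items() if m == modality}

    def assign(self, modality: str, cue: str, purpose: str) -> None:
        key = (modality, cue)
        existing = self._purposes.get(key)
        if existing is not None:
            if existing != purpose:
                # Reusing one cue for two purposes would make it ambiguous to the user.
                raise ValueError(f"{modality} cue {cue!r} is already used for {existing!r}")
            return  # same cue, same purpose: nothing to do
        current = self.purposes_for(modality)
        if purpose not in current and len(current) >= self.max_purposes:
            raise ValueError(f"{modality} already serves {len(current)} purposes")
        self._purposes[key] = purpose


if __name__ == "__main__":
    plan = ModalityPlan()
    plan.assign("light", "green_pulse", "happiness")
    plan.assign("sound", "chirp", "happiness")
    print(sorted(plan.purposes_for("light")))
```

The check runs at design time rather than on the robot, so conflicts surface before users ever see an ambiguous signal.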

If you want to use light or sound to express happiness, take a look at Joyful anticipation.

Related patterns:

Needed by: Joyful positive feedback, Gloating negative feedback

Is extended by: Joyful anticipation

Examples

Rationale

Research in movement perception and HRI suggests that happiness can be expressed through rather fast, rhythmic movements or rotations, including changes in tempo. Movements on the floor or weight shifts from left to right at a rather fast pace can be used to imitate dancing.

There is also a large body of research that investigates how dynamic body expressions of humanoid robots can be used to express emotions. All these approaches try to imitate human gestures of happiness such as lifting one or both arms in the air or performing a little dance.

References and further reading:

- Yuk, N. S., & Kwon, D. S. (2008, October). Realization of expressive body motion using leg-wheel hybrid mobile robot: KaMERo1. In 2008 International Conference on Control, Automation and Systems (pp. 2350–2355). IEEE. https://doi.org/10.1109/ICCAS.2008.4694198

- Melzer, A., Shafir, T., & Tsachor, R. P. (2019). How do we recognize emotion from movement? Specific motor components contribute to the recognition of each emotion. Frontiers in Psychology, 10, 1389. https://doi.org/10.3389/fpsyg.2019.01389

- Camurri, A., Lagerlöf, I., & Volpe, G. (2003). Recognizing emotion from dance movement: comparison of spectator recognition and automated techniques. International Journal of Human-Computer Studies, 59(1–2), 213–225. https://doi.org/10.1016/S1071-5819(03)00050-8

- Desai, R., Anderson, F., Matejka, J., Coros, S., McCann, J., Fitzmaurice, G., & Grossman, T. (2019, May). Geppetto: Enabling Semantic Design of Expressive Robot Behaviors. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–14). https://doi.org/10.1145/3290605.3300599

- Johnson, D. O., Cuijpers, R. H., Pollmann, K., & van de Ven, A. A. (2016). Exploring the entertainment value of playing games with a humanoid robot. International Journal of Social Robotics, 8(2), 247–269. https://doi.org/10.1007/s12369-015-0331-x

- Häring, M., Bee, N., & André, E. (2011, July). Creation and evaluation of emotion expression with body movement, sound and eye color for humanoid robots. In 2011 RO-MAN (pp. 204–209). IEEE. https://doi.org/10.1109/ROMAN.2011.6005263

- Pollmann, K., Tagalidou, N., & Fronemann, N. (2019). It’s in Your Eyes: Which Facial Design is Best Suited to Let a Robot Express Emotions? In Proceedings of Mensch und Computer 2019 (pp. 639–642). https://doi.org/10.1145/3340764.3344883