Interaction Situation

The robot and the user are engaged in an interaction, and the robot communicates that its full attention is focused on the user. Use this pattern in two types of situations:

A) The user is doing or saying something that is relevant for the interaction.

B) The robot is doing or saying something that concerns the user (not the context).

Solution

Let the robot express that:

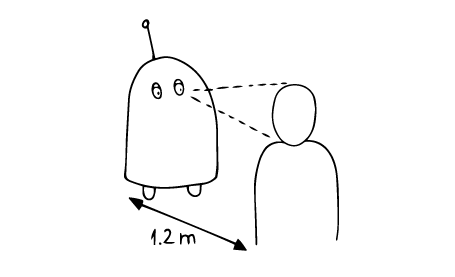

Attentiveness towards the user can be expressed by positioning the robot in the room relative to the user. Place the robot at social distance from the user (about 1.2 m) and let its front face the user. It should always be clear where the front of the robot is. If possible, use active user tracking so that the robot maintains eye contact with the user. Eye contact can also be achieved with robots that do not have eyes: in this case, point the visual sensory organ, the camera, at the user and, if possible, let it follow her head based on user tracking.
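The positioning and gaze rules above can be sketched geometrically. The following Python sketch is illustrative only and rests on several assumptions not stated in the pattern: a flat 2D floor frame in metres, known robot and user positions (for example from some user tracker), a camera mounted directly above the robot's centre, and the pattern's ~1.2 m treated as a tunable constant. The names `attentive_pose` and `camera_pan_tilt` are hypothetical helpers, not part of any robot API.

```python
import math
from dataclasses import dataclass

# Assumption: the pattern's "about 1.2 m" social distance, kept as a tunable constant.
SOCIAL_DISTANCE_M = 1.2


@dataclass
class Pose2D:
    """Robot pose on the floor plane; the robot's front points along theta (radians)."""
    x: float
    y: float
    theta: float


def attentive_pose(robot_x: float, robot_y: float,
                   user_x: float, user_y: float,
                   distance: float = SOCIAL_DISTANCE_M) -> Pose2D:
    """Hypothetical helper: goal pose `distance` metres from the user,
    on the line from the robot to the user, with the front facing the user."""
    dx, dy = user_x - robot_x, user_y - robot_y
    d = math.hypot(dx, dy)
    if d == 0.0:
        raise ValueError("robot and user positions coincide; approach direction undefined")
    ux, uy = dx / d, dy / d  # unit vector pointing from the robot towards the user
    # Stop `distance` metres short of the user (this backs away if already too close).
    goal_x, goal_y = user_x - ux * distance, user_y - uy * distance
    # The goal lies on the same line, so facing the user means heading along (ux, uy).
    return Pose2D(goal_x, goal_y, math.atan2(dy, dx))


def camera_pan_tilt(pose: Pose2D, camera_height: float,
                    head_x: float, head_y: float, head_z: float) -> tuple[float, float]:
    """Hypothetical helper: pan (relative to the robot's front) and tilt angles, in
    radians, that point a camera mounted above the robot's centre at the user's head."""
    dx, dy = head_x - pose.x, head_y - pose.y
    pan = math.atan2(dy, dx) - pose.theta
    pan = math.atan2(math.sin(pan), math.cos(pan))  # wrap into [-pi, pi]
    tilt = math.atan2(head_z - camera_height, math.hypot(dx, dy))  # positive = look up
    return pan, tilt


if __name__ == "__main__":
    goal = attentive_pose(0.0, 0.0, 3.0, 0.0)
    print(goal)  # a pose 1.2 m short of the user, facing along +x
    # Recompute the gaze each time the (assumed) tracker reports a new head position.
    for head in [(3.0, 0.0, 1.6), (3.0, 0.3, 1.6)]:
        print(camera_pan_tilt(goal, 1.0, *head))
```

Splitting body placement from gaze is a design choice of this sketch: the camera angles can be updated on every tracker frame to follow the user's head, while the base pose only needs recomputing when the user moves noticeably.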

Related patterns:

Needed by: Active, Becoming active, Becoming inactive, Processing, Not understanding, Showing, Listening, Explaining, Encouraging good performance, Joyful positive feedback, Displeased positive feedback, Empathic negative feedback, Gloating negative feedback

Opposed patterns: Inside turn, Passively available

Examples

Rationale

In interaction between agents, attentiveness can be signaled by coming closer, that is, by decreasing the distance between oneself and the other agent. Research in human-robot interaction shows that a robot is perceived as attentive when its front is facing the user. From social interaction, we have also learned to interpret eye contact as a sign that someone’s attention is on us. Establishing eye contact serves as an unspoken agreement to engage in social interaction with each other.

These two concepts can easily be transferred to robots, as two examples demonstrate: in the movie “Robot and Frank”, the robot turns towards the user when talking to him, and Pixar’s robot WALL-E moves its head closer to objects of interest.

References & further reading:

- Goffman, E. (1963). Behavior in Public Places. Glencoe: The Free Press.

- Jan, D., & Traum, D. R. (2007, May). Dynamic movement and positioning of embodied agents in multiparty conversations. In Proceedings of the 6th international joint conference on Autonomous agents and multiagent systems (pp. 1–3). https://doi.org/10.1145/1329125.1329142

- Cassell, J., & Thorisson, K. R. (1999a). The power of a nod and a glance: Envelope vs. emotional feedback in animated conversational agents. Applied Artificial Intelligence, 13(4–5), 519–538. https://doi.org/10.1080/088395199117360

- Cassell, J., Bickmore, T., Billinghurst, M., Campbell, L., Chang, K., Vilhjálmsson, H., & Yan, H. (1999b, May). Embodiment in conversational interfaces: Rea. In Proceedings of the SIGCHI conference on Human Factors in Computing Systems (pp. 520–527). https://doi.org/10.1145/302979.303150

- Mizoguchi, H., Takagi, K., Hatamura, Y., Nakao, M., & Sato, T. (1997, September). Behavioral expression by an expressive mobile robot-expressing vividness, mental distance, and attention. In Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robot and Systems. Innovative Robotics for Real-World Applications. IROS’97 (Vol. 1, pp. 306–311). IEEE. https://doi.org/10.1109/IROS.1997.649070